- cross-posted to:

- technology@lemmy.ml

Previous posts: https://programming.dev/post/3974121 and https://programming.dev/post/3974080

Original survey link: https://forms.gle/7Bu3Tyi5fufmY8Vc8

Thanks for all the answers, here are the results for the survey in case you were wondering how you did!

Edit: People working in CS or a related field have a 9.59 avg score while the people that aren’t have a 9.61 avg.

People that have used AI image generators before got a 9.70 avg, while people that haven’t have a 9.39 avg score.

One thing I’d be interested in is getting a self-assessment from each person regarding how good they believe themselves to have been at picking out the fakes.

I already see online comments constantly claiming that they can “totally tell” when an image is AI or a comment was ChatGPT, but I suspect that confirmation bias plays a bigger part than most people suspect in how much they trust a source (the classic “if I agree with it, it’s true; if I don’t, then it’s a bot/shill/idiot”).

With the majority being in CS fields and having used AI image generation before, they would likely be better at picking them out than the average person.

You’d think, but according to OP they were basically the same, slightly worse actually, which is interesting

The ones using image generation did slightly better

I was mostly commenting to point out that it’s not necessary to find that person who can “totally tell”, because they can’t.

Even when you know what you are looking for, you are basically pixel-hunting for artifacts or other signs that show it’s AI, without the image actually looking fake. For example, the avocado one was easy to tell, since avocado-related things have been used as test images ever since DALLE1; the https://thispersondoesnotexist.com/ one was obvious due to how it was framed; and some of the landscapes had the noisy-vegetation look that AI images tend to have. But none of the images look fake on their own: if you didn’t specifically look for AI artifacts, it would be impossible to tell the difference, or even to notice that anything is wrong with the image to begin with.

Right? A self-assessed skill that is never tested is a funny thing anyway. It boils down to “I believe I’m good at it because I believe my belief is correct”, which in itself is shady. On top of that, people have an incentive to believe they are good at it, and those who don’t probably don’t speak up as much. Personally, I believe people lack the competence to make statements like these with any significant meaning.

Did you not check for a correlation between profession and accuracy of guesses?

I have. Disappointingly there isn’t much difference, the people working in CS have a 9.59 avg while the people that aren’t have a 9.61 avg.

There is a difference in people that have used AI gen before. People that have got a 9.70 avg, while people that haven’t have a 9.39 avg score. I’ll update the post to add this.

| | Mean | SD |
|---|---|---|
| No | 9.40 | 2.27 |
| Yes | 9.74 | 2.30 |

Definitely no.

I would say so, but the sample size isn’t big enough to be sure of it.

So no. For a result to be “statistically significant”, the probability of getting a difference at least that large from noise/randomness alone has to be below a given threshold. Few if any things will ever be “100% sure.”
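As a rough illustration of that threshold idea, here is a minimal sketch that tests the No/Yes difference from the table of means and SDs above. It uses Welch’s standard error with a normal approximation (an assumption that only holds for reasonably large groups), and the group sizes of 100 are hypothetical placeholders, since the thread does not report how many people were in each group.

```python
import math
from statistics import NormalDist


def welch_z_test(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Two-sided test for a difference in means, from summary statistics only.

    Uses Welch's standard error (no equal-variance assumption) with a normal
    approximation, which is only reasonable when both groups are fairly large.
    """
    # Standard error of the difference between the two group means.
    se = math.sqrt(sd_a**2 / n_a + sd_b**2 / n_b)
    stat = (mean_b - mean_a) / se
    # Probability of a gap at least this large, in either direction, from noise alone.
    p = 2 * (1 - NormalDist().cdf(abs(stat)))
    return stat, p


# Means and SDs come from the No/Yes table in the thread.
# The group sizes (100 each) are HYPOTHETICAL: the thread does not report them.
stat, p = welch_z_test(9.40, 2.27, 100, 9.74, 2.30, 100)
print(f"statistic = {stat:.2f}, p = {p:.2f}")  # statistic = 1.05, p = 0.29
```

With 100 people per group, a 0.34-point gap is well within what noise alone produces. Keeping the same SDs, it would take roughly 350 respondents per group before the gap crossed the usual 0.05 threshold. For small groups, `scipy.stats.ttest_ind_from_stats(..., equal_var=False)` computes the same Welch statistic with a proper t-distribution instead of the normal approximation.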

Can we get the raw data set? / could you make it open? I have academic use for it.

Sure, but keep in mind this is a casual survey. Don’t take the results too seriously. Have fun: https://docs.google.com/spreadsheets/d/1MkuZG2MiGj-77PGkuCAM3Btb1_Lb4TFEx8tTZKiOoYI

Do give some credit if you can.

Of course! I’m going to find a way to integrate this dataset into a class I teach.

If I may be a bother, would you mind adding a tab that details which images were AI and which were not? It would make it more usable, and people could recreate the values you have on Sheet1 J1:K20.

Done. Column B in the second sheet contains the answers (“Yes” means AI-generated, “No” means not).
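A minimal sketch of how per-respondent scores and per-image ratios could be recomputed against that answer key. It assumes, hypothetically, that each respondent’s guesses have been exported as a list of “Yes”/“No” strings in image order, matching the column B convention; the four-image key and two respondents below are made-up toy data, not the survey’s.

```python
def score(guesses, key):
    """Number of images a respondent classified correctly ("Yes" = AI generated)."""
    if len(guesses) != len(key):
        raise ValueError("expected exactly one guess per image")
    return sum(guess == answer for guess, answer in zip(guesses, key))


def per_image_accuracy(all_guesses, key):
    """Fraction of respondents who got each image right, in image order."""
    return [
        sum(guesses[i] == key[i] for guesses in all_guesses) / len(all_guesses)
        for i in range(len(key))
    ]


# Toy data (HYPOTHETICAL): a 4-image answer key and two respondents.
answer_key = ["Yes", "No", "Yes", "No"]
responses = {
    "respondent_1": ["Yes", "No", "No", "No"],
    "respondent_2": ["No", "No", "Yes", "Yes"],
}

scores = {name: score(g, answer_key) for name, g in responses.items()}
print(scores)  # {'respondent_1': 3, 'respondent_2': 2}
print(per_image_accuracy(list(responses.values()), answer_key))  # [0.5, 1.0, 0.5, 0.5]
```

Group averages such as the CS vs. non-CS comparison are then just the mean of the scores within each group.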

Awesome! Thanks very much.

One thing, and I’m not sure whether it skews anything: technically, AI images are curated more than anything. You take a few prompts, throw them into a black box, it spits out a couple, you refine, throw them back in, and repeat. So I don’t know if it’s fair to say people are getting fooled by AI-generated images rather than AI-curated ones, which I feel is an important distinction: these images were chosen because they look realistic.

Technically you’re right, but the thing about AI image generators is that they make it really easy to mass-produce results. Each one I used in the survey took me only a few minutes, if that. Some images, like the cat ones, came out great on the first try. If someone wants to curate AI images, it takes little effort.

So if the average is roughly 10/20, that’s about the same as responding randomly each time, does that mean humans are completely unable to distinguish AI images?

In theory, yes. In practice, not necessarily.

I found that the images were not very representative of typical AI art styles I’ve seen in the wild. So not only would that render preexisting learned cues incorrect, it could actually turn them into obstacles to guessing correctly, pushing the score below random guessing (especially if the images in this test were not randomly chosen, but were instead actively chosen to look unlike typical AI images).

I would also think it depends on what kinds of art you are familiar with. If you don’t know what normal pencil art looks like, how are ya supposed to recognize the AI version.

As an example, when I’m browsing certain, ah, nsfw art, I can recognize the AI ones no issue.

If you look at the ratio of correct answers for each picture, you’ll notice that there are roughly two categories: hard and easy pictures. Based on information like this, OP could fine-tune a more comprehensive questionnaire to include some photos that are clearly in between. I think it would be interesting to use this data to figure out what makes a picture easy or hard to identify correctly.

My guess is that a picture is easy if it has fingers or logical structures such as text, railways, buildings etc. while illustrations and drawings could be harder to identify correctly. Also, some natural structures such as coral, leaves and rocks could be difficult to identify correctly. When an AI makes mistakes in those areas, humans won’t notice them very easily.

The number of easy and hard pictures was roughly equal, which brings the mean and median values close to 10/20. If you want to bring that value up or down, just change the number of hard-to-identify pictures.
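The easy/hard argument follows from linearity of expectation: the expected score is the sum, over images, of the probability that a respondent gets that image right. The sketch below assumes, purely for illustration, that “easy” images are answered correctly 80% of the time and “hard” ones 20% of the time (the thread mentions a couple of human-made images that only about 20% got right); these rates are not fitted to the survey data.

```python
def expected_score(n_easy, n_hard, p_easy=0.8, p_hard=0.2):
    """Expected number of correct answers, by linearity of expectation.

    p_easy and p_hard are HYPOTHETICAL per-image probabilities of a correct
    answer; they are illustrative, not estimated from the survey.
    """
    return n_easy * p_easy + n_hard * p_hard


# Keep 20 images in total and vary how many of them are hard.
for n_hard in (6, 10, 14):
    print(n_hard, round(expected_score(20 - n_hard, n_hard), 2))
# 6 12.4
# 10 10.0
# 14 7.6
```

With an even split, the expected score lands at 10/20 even though nobody in this model is guessing at random, which is why a 10/20 average on its own can’t separate “people can’t tell” from “the test mixes easy and hard images”.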

It depends on whether these were hand-picked as the most convincing. If they were, this can’t be used as a representative sample.

But you will always hand pick generated images. It’s not like you hit the generate button once and call it a day, you hit it dozens of times tweaking it until you get what you want. This is a perfectly representative sample.

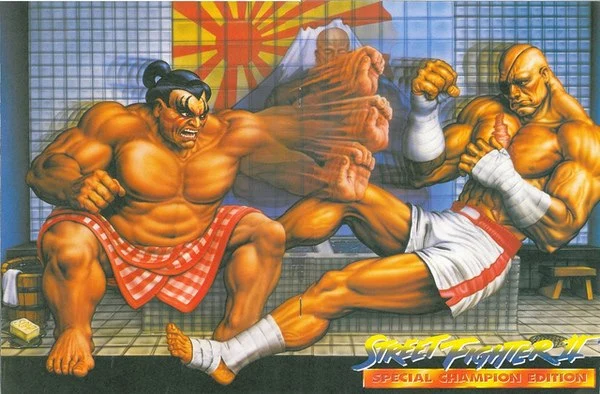

As a personal example, this is what I generated, and after a few hours of tweaking, regenerating, and inpainting, this was the final result. And here’s another: initial generation, the progress animation, and end result.

Are they perfect? No. But the really obviously bad AI art comes from people who expect it to spit out perfect images.

Personally, I’m not surprised. I thought a 3D dancing baby was real.

From this particular set, at least. Notice how people were better at guessing some particular images.

Stylized and painterly images seem particularly hard to differentiate.

Having used stable diffusion quite a bit, I suspect the data set here is using only the most difficult to distinguish photos. Most results are nowhere near as convincing as these. Notice the lack of hands. Still, this establishes that AI is capable of creating art that most people can’t tell apart from human made art, albeit with some trial and error and a lot of duds.

Idk if I’d agree that cherry picking images has any negative impact on the validity of the results - when people are creating an AI generated image, particularly if they intend to deceive, they’ll keep generating images until they get one that’s convincing

At least when I use SD, I generally generate 3-5 images for each prompt, often regenerating several times with small tweaks to the prompt until I get something I’m satisfied with.

Whether or not humans can recognize the worst efforts of these AI image generators is more or less irrelevant, because only the laziest deceivers will be using the really obviously wonky images rather than cherry-picking.

AI is only good at a subset of all possible images. If you have images with multiple people, real world products, text, hands interacting with stuff, unusual posing, etc. it becomes far more likely that artifacts slip in, often times huge ones that are very easy to spot. For example even DALLE-3 can’t generate a realistic looking N64. It will generate something that looks very N64’ish and gets the overall shape right, but is wrong in all the little details, the logo is distorted, the ports have the wrong shape, etc.

If you spend a lot of time inpainting and manually adjusting things, you can get rid of some of the artifacts, but at that point you aren’t really AI-generating images anymore; you’re just using AI as a source for photoshopping. If you just use AI and pick the best images, you will end up with a collection of images that all look very AI-ish, since they will all feature very similar framing, posing, layout, etc. Even if no individual image looks suspicious by itself, when you have a large number of them they always end up looking very similar, as they lack the diversity that human-made images have and lack temporal consistency.

These images were fun, but we can’t draw any conclusions from it. They were clearly chosen to be hard to distinguish. It’s like picking 20 images of androgynous looking people and then asking everyone to identify them as women or men. The fact that success rate will be near 50% says nothing about the general skill of identifying gender.

Wow, what a result. Slight right skew but almost normally distributed around the exact expected value for pure guessing.

Assuming there were 10 examples in each class anyway.
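A pure coin-flip guesser gets each image right with probability 0.5 regardless of its true label, so their score follows a Binomial(20, 0.5) distribution with mean 10 and standard deviation √5 ≈ 2.24, whether or not the two classes are balanced. A minimal sketch of that baseline, where the 20-image count comes from the survey and the independent coin-flip model is an assumption:

```python
from math import comb, sqrt

N = 20   # images in the survey
P = 0.5  # ASSUMED chance of a correct answer when guessing at random


def prob_at_least(k, n=N, p=P):
    """P(score >= k) for a Binomial(n, p) random guesser."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


mean_score = N * P                # expected score of a random guesser
sd_score = sqrt(N * P * (1 - P))  # its standard deviation
print(mean_score, round(sd_score, 2))                      # 10.0 2.24
print(round(prob_at_least(12), 3), round(prob_at_least(17), 4))  # 0.252 0.0013
```

The standard deviations of about 2.3 in the No/Yes table are close to the 2.24 a coin-flip guesser would produce, which is consistent with (though not proof of) mostly chance-level performance. Under this model, 12/20 or better happens about 25% of the time by chance, while 17/20 or better happens only about 0.13% of the time.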

It would be really cool to follow up by giving some sort of training on how to tell, if indeed such training exists, then retest to see if people get better.

IMO, 3, 17, and 18 were obviously AI (based on what I’ve seen from AI art generators in the past*). But whatever original art those are based on, I’d probably also flag as obviously AI. The rest I was basically guessing at random, especially the sketches.

*I never used AI generators myself, but I’ve seen others do it on stream. Curious how many others like me are raising the average for the “people that haven’t used AI image generators before” group.

I’d be curious about 18, what makes it obviously generated for you? Out of the ones not shown in the result, I got most right but not this one.

The arm rest seamlessly and senselessly blends into the rest of the couch.

Thank you. I’m not sure how I missed that.

Seen a lot of very similar pictures generated via midjourney. Mostly goats fused with the couch.

I was legitimately surprised by the man on a bench being human-made. His ankle is so thin! The woman in a bar/restaurant also surprised me because of her tiny finger.

Sketches are especially hard to tell apart because even humans put in extra lines and add embellishments here and there. I’m not surprised more than 70% of participants weren’t able to tell that one was generated.

Curious which human-made image was most likely to be classified as AI-generated.

and

About 20% got those correct as human-made.

That’s the same image twice?

Thanks. Fixed.

My first impression was “AI” when I saw them, but I figured an AI would have put buildings on the road in the town, and the second one was weird, but the parts fit together well enough.

I think it’s because of the shadow on the ground being impossible

Something I’d be interested in is restricting the “Are you in computer science?” question to AI-related fields, rather than the whole of CS, which is about as broad a field as social science. Neural networks are a tiny sliver of a tiny sliver.

Especially depending on the nation or district a person lives in, where CS can have even broader implications like everything from IT Support to Engineering.

It’d be interesting to also see the two non-AI images that the most people thought were AI.

Thank you so much for sharing the results. Very interesting to see the outcome after participating in the survey.

Out of interest: do you know how many participants came from Lemmy compared to other platforms?

No idea, but I would assume most results are from here since Lemmy is where I got the most attention and feedback.

I found the survey through Tumblr; I expect there are quite a few from there.

Oh nice, do you have a link for where it was posted?

I’ll see if I can find it, but my dashboard hits 99+ notifications in about six hours…

What I learnt is that I’m bad at that task.

I got a 17/20, which is awesome!

I’m angry because I could’ve gotten an 18/20 if I’d paid attention to the glasses in the thispersondoesnotexist image, which, in hindsight, are clearly all messed up.

I did guess that one human-created image was made by AI, “The End of the Journey”. I guessed that way because the horses had unspecific legs and no tails. And also, the back door of the cart they were pulling also looked funky. The sky looked weirdly detailed near the top of the image, and suddenly less detailed near the middle. And it had birds at the very corner of the image, which was weird. I did notice the cart has a step-up stool thing attached to the door, which is something an AI likely wouldn’t include. But I was unsure of that. In the end, I chose wrong.

It seems the best strategy really is to look at the image and ask two questions:

- what intricate details of this image are weird or strange?

- does this image have ideas that indicate thought was put into them?

About the second bullet point, it was immediately clear to me the strawberry cat thing was human-made, because the waffle cone it was sitting in was shaped like a fish. That’s not really something an AI would understand is clever.

On the tomato and avocado one, the avocado was missing an eyebrow, and one of the leaves on the tomato’s stem didn’t connect correctly to the rest. Plus, their shadows were identical and didn’t match the shadows a human would have drawn: a human would either have drawn two perfect simplified circles or included the avocado’s arm. The AI included the feet but not the arm. It was odd.

The anime sword guy’s armor suddenly diverged in style when compared to the left and right of the sword. It’s especially apparent in his skirt and the shoulder pads.

The sketch of the girl sitting on the bench also had a mistake: one of the back legs of the bench didn’t make sense. Her shoes were also very indistinct.

I’ve not had a lot of practice staring at AI images, so this result is cool!

does this image have ideas that indicate thought was put into them?

I got fooled by the bright mountain one. I assumed it was just generic art vomit a la Kinkade

About the second bullet point, it was immediately clear to me the strawberry cat thing was human-made, because the waffle cone it was sitting in was shaped like a fish. That’s not really something an AI would understand is clever.

It’s a [Taiyaki cone](https://japancrate.com/cdn/shop/articles/275b4c92-2649-4e94-896f-4bc3ce4acbad_1200x1200.png?v=1678715822), something that already exists. Wouldn’t be too hard to get AI to replicate it, probably.

I personally thought the stuff hanging on the side was oddly placed and got fooled by it.

You put ‘tell’ twice in the title 😅

God DAMN it

I guess I should feel good with my 12/20 since it’s better than average.